Seminar on Shared Perception and Explainable AI in HRI

We cordially invite you to a hybrid seminar on shared perception and explainable AI in HRI, featuring lectures by two researchers from the Italian Institute of Technology (IIT) in Genoa, Italy, a TERAIS partner institution: Dr. Omar Eldardeer and Dr. Marco Matarese. The seminar will be held on

Friday 11.4.2025 at 11:30 in room I-9 at FMPI UK as well as online via

Dr. Omar Eldardeer

Omar Eldardeer is a postdoctoral researcher in the COgNiTive Architecture for Collaborative Technologies (CONTACT) unit at the Italian Institute of Technology. His research focuses on building cognitive architectures for robot perception, learning, and interaction with the human in the loop, typically in interaction or collaboration scenarios. He earned his B.Eng. in Electrical Energy Engineering from Cairo University, Egypt, followed by an M.Sc. in Artificial Intelligence and Robotics from the University of Essex, UK, with a thesis on multi-modal spatial awareness for robots at home. He then obtained his Ph.D. in Bioengineering and Robotics from the University of Genoa and the Italian Institute of Technology in the Robotics, Brain, and Cognitive Sciences (RBCS) unit, where he explored audio-visual cognitive architectures for human-robot shared perception. During his Ph.D. and postdoctoral research, he contributed to the VOJEXT and APRIL EU projects and was a visiting researcher at the University of Essex, the University of Lethbridge, and the University of Bremen at different times. He now leads a work package within the TERAIS project as “Achievement of Scientific Excellence Leader”.

Talk: Towards Human-Robot Shared Perception

With the increasing presence of robots in our daily lives, the need for seamless human-robot collaboration has become more critical than ever. A fundamental aspect of this collaboration is shared perception—the ability for both humans and robots to develop a mutual understanding of their environment to support effective joint action. In this talk, we explore the theoretical foundations necessary for achieving shared perception, discussing key requirements.

Furthermore, we delve into biologically inspired cognitive architectures that enhance robotic perception, drawing insights from human cognition to improve how robots interpret, learn from, and respond to dynamic environments. By integrating principles from neuroscience and artificial intelligence, these architectures pave the way for more intuitive and natural human-robot interactions. Through this discussion, we aim to highlight the challenges, opportunities, and future directions in the quest for more intelligent and collaborative robotic systems.

Dr. Marco Matarese

Marco Matarese is a postdoctoral researcher at the Italian Institute of Technology (IIT) and a research fellow at the University of Naples Parthenope. He obtained his Ph.D. at the University of Genoa (DIBRIS) and the Italian Institute of Technology, and his Bachelor’s and M.Sc. degrees in Computer Science at the University of Naples Federico II. During his Ph.D., he investigated how the explainability of social robots influences human-robot collaboration and tutoring. While abroad during his Ph.D., he collaborated with Prof. Katharina Rohlfing from Paderborn University and the TRR 318 “Constructing Explainability”, working on partner-aware explainable artificial intelligence (XAI) in tutoring with social agents. Previously, he worked on robots’ behavioral modeling and human-robot shared perception. He has given several invited talks at top institutions and events, including the University of Bielefeld (CITEC) and the International Symposium “Humans at the Centre of HRI” organized by Naver Labs Europe and Inria. For his M.Sc. thesis, a research work conducted in conjunction with the Italian Institute of Technology, he received the Best MSc Thesis Award from the Istituto di Biorobotica, Scuola Superiore Sant’Anna, Pisa.

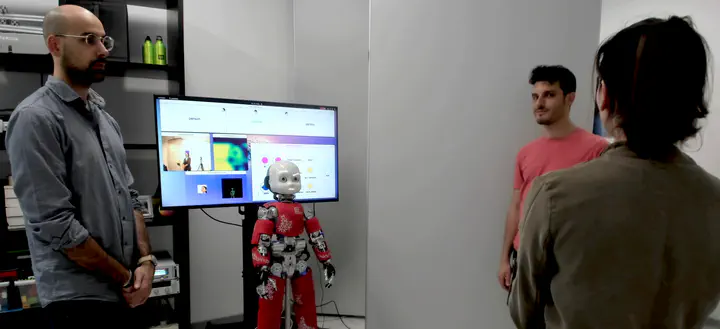

Talk: Friend or Foe? The Role of Socially Skilled Robots in Explainable AI

As artificial intelligence (AI) systems become more prevalent in high-stakes decision-making and collaborative environments, the explainability of such systems remains a critical challenge. Integrating social skills into explainable AI (XAI) represents a promising step toward more effective human-AI collaboration. Socially capable robots can enhance trust, engagement, and comprehension by delivering explanations in ways that align with human cognitive and social processes.

In this talk, we examine the potential of socially skilled robots in XAI by highlighting the positive effects of social explanations on human-robot collaboration. By leveraging natural communication patterns, such as verbal and non-verbal cues, these systems can facilitate better decision-making and performance in complex tasks.

However, we also explore the potential downsides of training assisted by explainable artificial agents, since deploying such agents is not without risks. Excessive reliance on AI explanations can reduce users’ own learning and problem-solving efforts, fostering complacency and diminishing long-term learning outcomes. Additionally, social reciprocity mechanisms may lead users to accept explanations uncritically, even when they are flawed or misleading.

By examining these dynamics, we aim to foster a discussion on how to design AI systems that balance clarity, engagement, and user autonomy, ensuring that explanations support rather than hinder human learning and decision-making.